Heatmap using Python

In this project, we utilize Python to create animations that visualize weather events in the USA.

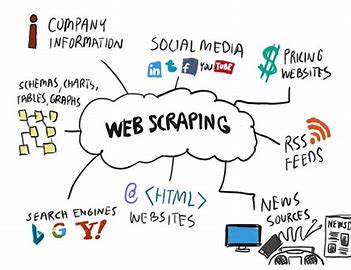

Web scraping involves using programming tools to extract data from websites. In this project, we use Python to download, save, unzip, clean, and export weather event data.
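
The download, save, unzip, clean and export steps can be sketched with the standard library alone. This is a minimal sketch under stated assumptions: the data is assumed to be published as gzip-compressed CSV files, and the column names (`STATE`, `EVENT_TYPE`, `BEGIN_YEARMONTH`), the URL and the helper names are hypothetical placeholders rather than the project's actual code.

```python
from __future__ import annotations

import csv
import gzip
import io
import urllib.request
from pathlib import Path

# Hypothetical column names; adjust them to the real dataset schema.
KEEP_COLUMNS = ["STATE", "EVENT_TYPE", "BEGIN_YEARMONTH"]


def download(url: str, dest: Path) -> Path:
    """Download a file and save it to disk unchanged."""
    with urllib.request.urlopen(url) as response:
        dest.write_bytes(response.read())
    return dest


def unzip_and_clean(raw_gz: bytes) -> list[dict[str, str]]:
    """Decompress a gzip-compressed CSV and keep complete rows of the needed columns."""
    text = gzip.decompress(raw_gz).decode("utf-8")
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        # Missing trailing fields come back as None, so normalise to "".
        cleaned = {col: (record.get(col) or "").strip() for col in KEEP_COLUMNS}
        if all(cleaned.values()):  # drop rows with any empty field
            cleaned["STATE"] = cleaned["STATE"].upper()
            rows.append(cleaned)
    return rows


def export(rows: list[dict[str, str]], dest: Path) -> None:
    """Write the cleaned rows to a plain CSV file."""
    with dest.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=KEEP_COLUMNS)
        writer.writeheader()
        writer.writerows(rows)


# Example usage (hypothetical URL):
# raw = download("https://example.com/events_2020.csv.gz", Path("events_2020.csv.gz")).read_bytes()
# export(unzip_and_clean(raw), Path("events_2020_clean.csv"))
```

Keeping the download separate from the cleaning step means the raw files stay on disk and cleaning can be re-run without fetching the data again.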

Artificial intelligence (AI) enables machines to learn from experience, adjust to new inputs, and perform human-like tasks. In this project, we apply a convolutional neural network (CNN) with the U-Net architecture to model weather events: we generate heatmaps, feed them to the network as input images, and use the network's output to predict weather events.
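
To make the U-Net idea concrete, here is a minimal NumPy sketch of a single-level U-Net forward pass: an encoder convolution, 2x2 max-pooling, a bottleneck convolution, upsampling, and a skip connection that concatenates the encoder features with the upsampled decoder features. It illustrates the data flow only, under stated simplifications: the weights are random and untrained, each level uses one convolution instead of two, and nearest-neighbour upsampling stands in for the transposed convolution of the original U-Net. The function names and the single-channel heatmap input are assumptions, not the project's model.

```python
import numpy as np


def conv3x3(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """'Same'-padded 3x3 convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W) feature maps; w: (C_out, C_in, 3, 3) kernels.
    """
    _, h, wd = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Contract over input channels for this kernel offset.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], padded[:, i:i + h, j:j + wd])
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 max-pooling; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling (stand-in for a transposed convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def tiny_unet(heatmap: np.ndarray, base: int = 8, seed: int = 0) -> np.ndarray:
    """One-level U-Net forward pass with random, untrained weights.

    heatmap: (H, W) array treated as a single input channel (H, W even).
    Returns an (H, W) map of per-cell scores in (0, 1).
    """
    rng = np.random.default_rng(seed)

    def kernels(c_out: int, c_in: int) -> np.ndarray:
        return rng.normal(scale=0.1, size=(c_out, c_in, 3, 3))

    x = heatmap[np.newaxis].astype(float)  # (1, H, W)
    skip = relu(conv3x3(x, kernels(base, 1)))  # encoder, full resolution
    bottom = relu(conv3x3(max_pool2(skip), kernels(2 * base, base)))  # bottleneck, half resolution
    up = upsample2(bottom)  # decoder, back to full resolution
    merged = np.concatenate([up, skip], axis=0)  # skip connection: 3 * base channels
    logits = conv3x3(merged, kernels(1, 3 * base))[0]  # single output channel
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid
```

The skip connection is what distinguishes U-Net from a plain encoder-decoder: the decoder sees the full-resolution encoder features directly, so fine spatial detail in the heatmap is not lost in the bottleneck. A real model would be built and trained in a deep-learning framework.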

Machine learning, a branch of artificial intelligence, automates analytical model building, enabling systems to learn from data, identify patterns, and make decisions with minimal human intervention. In this project, we apply regularized gradient boosting using XGBoost, a decision-tree-based ensemble machine learning algorithm.
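
The "regularized" part of regularized gradient boosting refers to the penalty XGBoost adds to its training objective. For reference, with $K$ trees $f_k$, each having $T$ leaves with weights $w$, the objective and the resulting optimal weight of leaf $j$ are:

```latex
\begin{aligned}
\mathcal{L} &= \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega(f_k),
  \qquad \Omega(f) = \gamma T + \tfrac{1}{2}\lambda \lVert w \rVert^2, \\
w_j^{*} &= -\frac{G_j}{H_j + \lambda},
  \qquad G_j = \sum_{i \in I_j} g_i, \quad H_j = \sum_{i \in I_j} h_i .
\end{aligned}
```

Here $g_i$ and $h_i$ are the first and second derivatives of the loss with respect to the previous round's prediction, and $I_j$ is the set of samples falling in leaf $j$. A larger $\gamma$ discourages extra leaves and a larger $\lambda$ shrinks leaf weights toward zero; in the XGBoost library these correspond to the `gamma` and `lambda` (`reg_lambda`) parameters.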

In this project, we use Python to preprocess the weather event data.
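
One typical preprocessing step is aggregating individual event records into counts per state and month, which is the form both count models and heatmaps need. A minimal standard-library sketch, assuming cleaned rows with hypothetical `STATE`, `EVENT_TYPE` and `BEGIN_YEARMONTH` (formatted `YYYYMM`) fields:

```python
from __future__ import annotations

from collections import Counter


def monthly_counts(
    rows: list[dict[str, str]], event_type: str | None = None
) -> Counter[tuple[str, int, int]]:
    """Count events per (state, year, month), optionally for one event type only.

    Assumes a hypothetical BEGIN_YEARMONTH field formatted as YYYYMM (e.g. "202007").
    """
    counts = Counter()
    for row in rows:
        if event_type is not None and row["EVENT_TYPE"] != event_type:
            continue
        ym = row["BEGIN_YEARMONTH"]
        if len(ym) != 6 or not ym.isdigit():
            continue  # skip malformed dates rather than guessing
        year, month = int(ym[:4]), int(ym[4:])
        if 1 <= month <= 12:
            counts[(row["STATE"], year, month)] += 1
    return counts
```

Months with no recorded events simply do not appear in the `Counter`; when building a regular grid (for example, one row per state and one column per month) they should be filled in as zeros.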

The Poisson regression model is a Generalized Linear Model (GLM) used to model count response variables. In this project, we apply Poisson regression models to predict the number of weather events occurring within a given period.
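
As a minimal sketch of what fitting such a model involves, the code below fits a Poisson GLM with the canonical log link, log E[y | x] = x · beta, by Newton-Raphson (which for this model coincides with iteratively reweighted least squares). It assumes NumPy, a design matrix whose first column is an intercept, and counts with a positive mean; the function names and toy data are illustrative, not the project's code.

```python
import numpy as np


def fit_poisson(X, y, n_iter: int = 50, tol: float = 1e-10) -> np.ndarray:
    """Fit log(E[y]) = X @ beta by Newton-Raphson on the Poisson log-likelihood.

    Assumes the first column of X is an intercept and y has a positive mean.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])
    beta[0] = np.log(y.mean())  # start from the intercept-only solution
    for _ in range(n_iter):
        mu = np.exp(X @ beta)  # expected counts
        grad = X.T @ (y - mu)  # score vector
        hess = X.T @ (X * mu[:, None])  # Fisher information (= negative Hessian)
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def predict_counts(X, beta: np.ndarray) -> np.ndarray:
    """Expected number of events for each row of X."""
    return np.exp(np.asarray(X, dtype=float) @ beta)


# Toy example: a binary covariate (e.g. "storm season" vs. not) and monthly counts.
X = np.column_stack([np.ones(4), [0, 0, 1, 1]])
y = np.array([1, 3, 6, 10])
beta = fit_poisson(X, y)
```

Because of the log link, exp(beta_1) is a rate ratio: in the toy data the season months have 8 events on average versus 2 otherwise, so exp(beta_1) is 4. In practice a library implementation such as statsmodels' `GLM` with `families.Poisson()` or scikit-learn's `PoissonRegressor` would normally be used, and an exposure offset can be added when periods differ in length.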

Heatmaps are a valuable tool, particularly in insurance, because they have immediate visual impact and convey complex information succinctly. As a key risk management tool, they embody the adage "a picture is worth a thousand words." In this project, we use Python to create heatmaps that visualize the occurrence of weather events in the USA over time.
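
A minimal sketch of a state-by-month heatmap, assuming event counts already aggregated into a mapping keyed by (state, month label). The function names, colour map and output file name are illustrative choices, not the project's code. Matplotlib is imported inside `plot_heatmap` so that the matrix-building step works without it.

```python
import numpy as np


def counts_to_matrix(counts: dict, states: list, months: list) -> np.ndarray:
    """Arrange {(state, month): count} into a states x months array; missing cells are 0."""
    matrix = np.zeros((len(states), len(months)))
    row = {s: i for i, s in enumerate(states)}
    col = {m: j for j, m in enumerate(months)}
    for (state, month), n in counts.items():
        if state in row and month in col:  # ignore states/months not on the grid
            matrix[row[state], col[month]] = n
    return matrix


def plot_heatmap(matrix: np.ndarray, states: list, months: list, path: str = "heatmap.png") -> None:
    """Render the matrix as a heatmap (rows = states, columns = months) and save it."""
    import matplotlib

    matplotlib.use("Agg")  # render to a file without needing a display
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(max(6, len(months) * 0.4), max(4, len(states) * 0.3)))
    im = ax.imshow(matrix, aspect="auto", cmap="YlOrRd")
    ax.set_xticks(range(len(months)))
    ax.set_xticklabels(months, rotation=90)
    ax.set_yticks(range(len(states)))
    ax.set_yticklabels(states)
    fig.colorbar(im, ax=ax, label="Number of events")
    fig.tight_layout()
    fig.savefig(path, dpi=150)
    plt.close(fig)
```

For an animation over time, the same matrix-building step can be repeated per year, with each year's heatmap drawn as one frame (for example with Matplotlib's `FuncAnimation`).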